Third-party assets are parasites. Friendly ones, with – most of the time – good intentions. But parasites anyway. Let’s discover some useful tips and techniques for dealing with third-party content and its impact on your website’s performance and security.

Why do we need to look after third parties?

Third-party content consists of assets over which you have little control, as they are provided and hosted by a third party.

Social network widgets, advertising and tracking scripts, and web font providers are some of the widespread third parties you’re used to dealing with.

Whether they are free or not, these providers allow website owners and developers to enrich web pages and web usage. Frequently, but not always, they offer features we just can’t provide ourselves (even when technically feasible, the cost might be prohibitive).

Third-party assets are used more and more on our websites (take a look at the evolution of the average number of domains used by a web page), and we do not pay due attention to this topic. Here’s some evidence:

- In 2012, thousands of websites faced major slowdowns… due to Facebook’s “Like” widget (Forbes’s article)

- More recently, you may remember Typekit’s font serving outage (related to an Amazon S3 outage), here again with thousands of websites affected (Post Mortem on Typekit’s blog)

- A lighter and funnier example might be DailyMotion broadcasting a job offer last year within the developer consoles of web browsers. The job offer was broadcast for a few days on *all* websites using their widgets!

(The message that was displayed within your console while browsing any website using a DailyMotion embed video widget)

If you don’t pay attention, a third party can affect your website in many ways, up to making it unavailable to your visitors!

Still, you’re probably not the only stakeholder when it comes to deciding whether or not to use a third party. However, you definitely have to go further than just reading and following the provider’s documentation.

I like to consider third-party content as parasites. Friendly ones, with – most of the time – good intentions. But parasites anyway, and a growing infection may take down your whole website.

Through this post, I aim to share my vision and experience of third-party content, but most of all to bring you some best practices and tools to mitigate the risks related to integrating it.

Speed and third-party content, a complicated story.

In this first part, we’ll focus on web performance and the effects of third-party content on speed.

This is an important topic for several reasons. Let’s start with the basics. First of all, we have to note that an external asset is loaded from a different domain name than the one used for your website. It means that the web browser has to perform a DNS resolution and then establish a new TCP connection.

At this early stage, we have to question the third-party server location: if the provider does not use a CDN (Content Delivery Network), it might result in high latency over the network (as latency is related to the nature of the network, but also to the distance between the server and the web browser).

If your website targets a particular country, you may not be familiar with this issue, as you’re probably hosting your website in this very same country. Using a third-party provider without a CDN might result in loading an asset hosted halfway around the world.

But that’s not all. If the request is protected by HTTPS (and it should be), you’ll have to add an extra delay, as HTTPS requires additional exchanges to establish the secure connection.

Finally, you’ll be dependent on the server response time and upstream bandwidth of the third-party provider.
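To make these delays concrete, here is a minimal sketch that splits the time spent on one request into the steps described above. The `TimingEntry` interface and the `breakdown` function are hypothetical names; the field names mirror those exposed by the browser’s Resource Timing entries.

```typescript
// Subset of the fields found on browser Resource Timing entries.
// All values are timestamps in milliseconds.
interface TimingEntry {
  name: string; // URL of the resource
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
  secureConnectionStart: number; // 0 when the request is not HTTPS
  requestStart: number;
  responseStart: number;
}

interface Breakdown {
  dns: number; // DNS resolution
  tcp: number; // TCP handshake, TLS excluded
  tls: number; // TLS negotiation
  wait: number; // request sent until first response byte
}

// Hypothetical helper: computes how long each step took for one entry.
function breakdown(entry: TimingEntry): Breakdown {
  const tls =
    entry.secureConnectionStart > 0
      ? entry.connectEnd - entry.secureConnectionStart
      : 0;
  return {
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    tcp: entry.connectEnd - entry.connectStart - tls,
    tls,
    wait: entry.responseStart - entry.requestStart,
  };
}
```

In a browser, such entries come from `performance.getEntriesByType("resource")`. Keep in mind that for cross-origin resources these detailed fields are reported as zero unless the provider sends a `Timing-Allow-Origin` header, so the breakdown may not be available for every third party.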

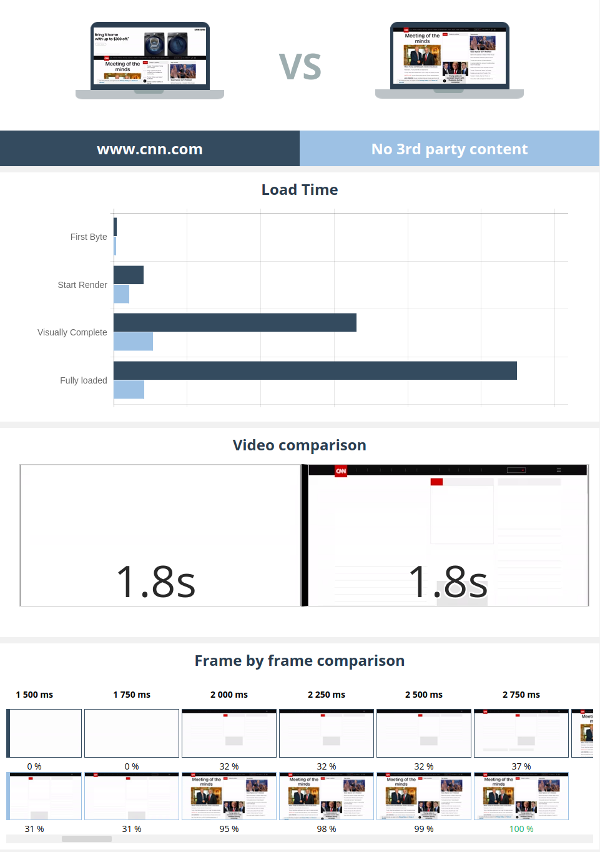

Some website speed test tools (give ours a try: Dareboost!) might help you to understand the impact of third-party content on your website performance. For example, here’s a comparison report of cnn.com both with and without their third-party content. Guess which version is faster?

As stated in the introduction, you don’t have control over the performance of third-party providers. You could still establish an SLA (Service Level Agreement), but most of the time that’s not an option, unless you’re running a major website with big money.

Third-party content can be a great way to enrich a website, but it comes with significant impacts. That’s why you have to limit this kind of dependency as much as possible.

You don’t have to load the whole framework of a social network to display a simple share button. Implementing your own is easy. Imagine your own implementation: even if it is more limited in some ways, it will definitely be a win in the end.
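As an illustration, a share button can be a plain link. The sketch below builds such links without loading any third-party script. `shareUrl` is a hypothetical helper, and the endpoint formats are the commonly used share URLs for these networks, so check them against each network’s current documentation before relying on them.

```typescript
type Network = "twitter" | "facebook";

// Hypothetical helper: returns the address a plain <a href="…"> share link
// can point to, so no third-party JavaScript is needed on the page.
function shareUrl(network: Network, pageUrl: string, title: string): string {
  const encodedUrl = encodeURIComponent(pageUrl);
  switch (network) {
    case "twitter":
      return `https://twitter.com/intent/tweet?url=${encodedUrl}&text=${encodeURIComponent(title)}`;
    case "facebook":
      // This endpoint only takes the page URL; the title is not used.
      return `https://www.facebook.com/sharer/sharer.php?u=${encodedUrl}`;
  }
}
```

You lose the share counters and the official look, but the third party is only contacted when a visitor actually clicks the link.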

Obviously, this example is a trivial one, and there may be some third-party content you just can’t do without. Then you’ll need to be very cautious in your integration.

Exclude third parties from the critical rendering path

Displaying a web page requires a lot of operations from the browser: receiving the HTML source code, fetching some assets, building the DOM and the CSS tree.

Some of these operations are blocking, meaning they need to complete before anything can be displayed. A well-known example is JavaScript loaded synchronously within the <head> of a web page.

Performance optimization best practices often focus on the critical rendering path, in other words on the elements and operations that affect the above-the-fold part of the page.

Given the different delays added by the use of third-party content, it’s really important to avoid using any of it within your critical rendering path. You should aim for absolute control over the early display of the page.

Both the Facebook and Typekit examples discussed earlier illustrate perfectly what is at stake when third-party content blocks your critical rendering path: dependencies that slow down your page load in the best-case scenario and, at worst, make your website unavailable if they fail.

A dependency whose failure results in such a breakdown is called a Single Point of Failure, a notion that front-end developers might not be so familiar with.

“A single point of failure (SPOF) is a part of a system that, if it fails, will stop the entire system from working.” – Wikipedia

At this stage, most of the time I can hear some people telling me: “Yeah, ok, my website has some SPOFs. But that’s Google Fonts. I mean GOOGLE. I can trust them for 100% uptime” or whatever big player they are confident about.

Short answer: they’re wrong. Pick the argument you like the most:

- There’s no bullet-proof big player. Our introduction was about Facebook and Typekit (related to an Amazon S3 failure), right? It will happen one day.

- A SPOF is not only triggered when the third party fails! A firewall or a network error/congestion might also leave a requested file without a response!

- When your website is not loading, from the visitors’ perspective, it is your fault. Not Google’s. Real people don’t have any idea of what’s required to display your page! You can trust the provider, but you alone will face the consequences.

I may sound a little bit alarmist here. A SPOF is a risk, and you should weigh its probability of occurrence and the related income loss against the cost of fixing it. It’s your personal blog? You can totally trust Google and keep going with your SPOF!

Of course, if you’re using a third party, that’s because it does something you need. A failing third party will then result in a missing feature. Most of the time, your website can – and should – work without this feature. If you’re doing business online for real, you need to eliminate SPOFs by all means!

Unfortunately, a blocking behaviour might be required by some third-party services. For example, some A/B testing solutions specifically advise using a blocking integration. Indeed, they wish to avoid flickering (the original page should not be shown before the A/B testing edits are applied). Yes, it’s nothing less than slowing down the page rendering, but on purpose.

Anyway, you can still prevent it from being a SPOF.

A blocking JavaScript file that is too slow to load will eventually be aborted by the browser. However, the default timeout policies of web browsers are not a good fit for non-critical resources (you would rather miss some A/B test sessions than have some customers waiting 30 seconds for a page to render). Default timeouts vary from a couple of seconds to… minutes (there’s a great post from Steve Souders if you want to know more about it).

Knowing that, you should take control over the default timeout policy if you need a blocking third-party asset. Consider the feature value for you and your user, configure the appropriate timeout, and you can even add a fallback!
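One possible way to take that control, sketched below, is to load the asset without blocking and make the page wait for it only up to a deadline you choose, after which a fallback is used. `withTimeout` is a hypothetical helper, and it assumes the third-party load is exposed as a promise.

```typescript
// Hypothetical helper: resolves with the loaded value if it arrives in time,
// otherwise with the fallback. A failed load also triggers the fallback.
function withTimeout<T>(
  load: Promise<T>,
  timeoutMs: number,
  fallback: () => T
): Promise<T> {
  return new Promise<T>((resolve) => {
    let settled = false;
    // Only the first outcome (load, failure, or deadline) is taken into account.
    const settle = (produce: () => T): void => {
      if (settled) return;
      settled = true;
      clearTimeout(timer);
      resolve(produce());
    };
    const timer = setTimeout(() => settle(fallback), timeoutMs);
    load.then(
      (value) => settle(() => value),
      () => settle(fallback)
    );
  });
}
```

For an A/B testing script, for instance, `load` would resolve once the variant has been applied, and the fallback would simply reveal the original page. The deadline value is a business decision: it is the longest delay you accept imposing on a visitor for the sake of this feature.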

Check this post from Typekit, who have updated their docs since the 2015 breakdown.

Last point about 3rd parties and speed: Tag Managers. You have probably integrated Google Tag Manager on some of your projects. It allows you to centralize third-party calls and brings a whole new level of autonomy for non-technical people to add new services… The good news is that GTM is loaded asynchronously by default, and the same goes for subsequent tags.

“With great power comes great responsibility”

Tag Managers often meet the needs of people without a technical background, who won’t be vigilant about the impacts of such injected content: risks for load time, but also for security, as we will discover later. Offering a Tag Manager means giving power to its users, and your job is probably to let them know about the implications, or to set up a validation workflow.

Quality Audit for your 3rd parties

As previously stated, in most cases we just can’t negotiate an SLA with the provider. Still, there are probably alternatives available on the market for what you need, and you should include quality and performance among the factors you consider when choosing a provider.

I remember considering a click2chat SaaS provider for our own website. While quickly checking the loaded assets, I saw an image file that was about 250KB. Optimizing this icon would have resulted in a file 100x lighter.

The chat was loaded asynchronously, so not a big deal. But still, that’s a slow image to load on low-bandwidth connections. More importantly, it showed that image optimization was not part of their workflow. The image could just as well have been 2MB.

A performance budget is a very interesting method to make sure your website remains fast. Combined with website monitoring, it can be a great way to keep control over third parties and how they evolve over time.

To audit the quality of a third party, Simon Hearne has done great work for you, with a checklist you can use to make sure you’re considering all major impacts.

When you find problems or potential optimizations, feel free to get in touch with the provider. Having a vendor fix it will probably benefit thousands of websites! Seeing how the provider deals with your request will also be a great insight.
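As a rough sketch of what such a budget could look like for third parties, the function below counts the requests and bytes that do not come from your own hosts and compares them to limits you set. All names (`LoadedResource`, `Budget`, `checkThirdPartyBudget`) and the figures in the usage note are hypothetical.

```typescript
interface LoadedResource {
  url: string;
  bytes: number; // transferred size
}

interface Budget {
  maxRequests: number;
  maxBytes: number;
}

interface BudgetReport {
  requests: number;
  bytes: number;
  withinBudget: boolean;
}

// Hypothetical check: everything not served from a first-party host
// counts against the third-party budget.
function checkThirdPartyBudget(
  resources: LoadedResource[],
  firstPartyHosts: string[],
  budget: Budget
): BudgetReport {
  const thirdParty = resources.filter(
    (resource) => !firstPartyHosts.includes(new URL(resource.url).hostname)
  );
  const requests = thirdParty.length;
  const bytes = thirdParty.reduce((sum, resource) => sum + resource.bytes, 0);
  return {
    requests,
    bytes,
    withinBudget: requests <= budget.maxRequests && bytes <= budget.maxBytes,
  };
}
```

With a 200KB budget, a chat widget shipping a 250KB icon like the one mentioned above would be flagged immediately. Run on every monitoring check, the same test would also reveal a provider silently getting heavier over time.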

Mitigate the risks related to 3rd parties

Adding a third party to a web page implies a high level of trust in the provider, as you’re losing some control. By applying the previous recommendations, you’ve made sure that the provider offers good quality, and that a failure won’t result in your website being unreachable.

Still, the third party has gained control over your page! You can’t blindly trust it. Even if the provider has the best intentions, it can still make mistakes, or even be hacked. Let’s speak about security.

First of all: HTTPS is a requirement. If your website is using the secure version of the protocol, you just can’t have 3rd parties working over HTTP (that would be Mixed Content, which web browsers block automatically, at least for scripts and other active content).

You need to go further on security by restricting the scope of 3rd party content. For example, you can use the sandbox attribute on iframes. It allows you to restrict the actions available to an iframe (for example, forbidding JavaScript).

Another – very powerful – tool: Content Security Policy. By using a simple HTTP header in your server’s response, you can explicitly define the content and behaviours you trust within your page. Whatever is not allowed, the browser won’t execute or request it. Assuming of course that the web browser supports CSP.
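Here is a small sketch of what a sandboxed embed could look like when the markup is generated from code. `sandboxedIframe` and `escapeAttr` are hypothetical helpers and the widget URL is a placeholder; the tokens are standard values of the sandbox attribute.

```typescript
// A few of the standard sandbox tokens; each one lifts a single restriction.
type SandboxToken =
  | "allow-scripts"
  | "allow-same-origin"
  | "allow-forms"
  | "allow-popups";

// Minimal escaping for a double-quoted HTML attribute value.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;");
}

// Hypothetical helper: an empty token list yields sandbox="", which applies
// every restriction, including forbidding JavaScript inside the frame.
function sandboxedIframe(src: string, allow: SandboxToken[] = []): string {
  return `<iframe src="${escapeAttr(src)}" sandbox="${allow.join(" ")}"></iframe>`;
}
```

Calling `sandboxedIframe("https://widgets.example.com/chat", ["allow-scripts"])` lets the widget run its own scripts while keeping the other restrictions in place. The safest approach is probably to start from the empty list and add tokens only when the widget demonstrably needs them.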

If your website is vulnerable to Cross-Site Scripting (XSS), defining a Content Security Policy won’t solve the vulnerability. Nevertheless, it will protect your users against the effects of an XSS attack.

Example: By using a form within your page, an attacker succeeds in injecting a JavaScript file into your page; let’s call it malicious.js. The script tag requesting malicious.js is indeed in your page. However, web browsers won’t actually request the file. Instead, they will throw an error, as this request violates your Content Security Policy directives. The XSS vulnerability has been exploited, but without affecting your visitors.

That’s a good way to make sure your third parties do not allow themselves to do more than they should with your pages. And if a provider were to be hacked, you would have mitigated the risks for your own traffic.

Last but not least, protect your cookies! A third party injecting JavaScript within your document can access the cookies related to your domain through JavaScript! By setting cookies with the HttpOnly flag, you’ll forbid any client-side access to them!
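To give an idea of what such a policy looks like, here is a minimal sketch that assembles the header value from a list of trusted sources. `buildCsp` is a hypothetical helper and `widgets.example.com` is a placeholder for a provider you trust; the directive names are standard CSP directives.

```typescript
// Maps a CSP directive name to the sources it allows.
type CspDirectives = Record<string, string[]>;

// Hypothetical helper: serializes the directives into the value of the
// Content-Security-Policy HTTP header.
function buildCsp(directives: CspDirectives): string {
  return Object.keys(directives)
    .map((name) => [name].concat(directives[name] ?? []).join(" "))
    .join("; ");
}

const policy = buildCsp({
  "default-src": ["'self'"],
  "script-src": ["'self'", "https://widgets.example.com"],
  "img-src": ["'self'", "https://widgets.example.com"],
});
// policy is:
// default-src 'self'; script-src 'self' https://widgets.example.com; img-src 'self' https://widgets.example.com
```

Sent as `Content-Security-Policy: <policy>`, this tells the browser that scripts may only come from your own origin and from the trusted provider. A malicious.js hosted anywhere else matches no allowed source, so it is never requested.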
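As a sketch, this is what the corresponding `Set-Cookie` response header could look like when built on the server. `setCookieHeader` and the cookie name and value are hypothetical; `Path`, `Secure` and `HttpOnly` are standard cookie attributes.

```typescript
interface CookieOptions {
  path?: string;
  secure?: boolean; // only sent over HTTPS
  httpOnly?: boolean; // hidden from document.cookie
}

// Hypothetical helper: builds the value of a Set-Cookie response header.
function setCookieHeader(
  name: string,
  value: string,
  options: CookieOptions = {}
): string {
  const parts = [`${name}=${encodeURIComponent(value)}`];
  if (options.path !== undefined) parts.push(`Path=${options.path}`);
  if (options.secure) parts.push("Secure");
  if (options.httpOnly) parts.push("HttpOnly");
  return parts.join("; ");
}
```

For example, `setCookieHeader("session", "abc123", { path: "/", secure: true, httpOnly: true })` produces `session=abc123; Path=/; Secure; HttpOnly`. The browser still sends that cookie with each request to your domain, but scripts in the page, third-party ones included, can no longer read it.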

Your turn!

Third-party usage is growing. Pay attention to the related risks and limit the number of third parties as much as possible. Audit those you need, and choose wisely among the alternatives on offer.

Keep in mind they are parasites, and they will evolve: add as many constraints as possible and monitor them!