After our articles about Test My Site and PageSpeed Insights, our series dedicated to the Google Speed Update ecosystem continues with another tool: Lighthouse.

Released in 2016 as a Chrome extension, Lighthouse is now also available directly in Chrome DevTools, via the “Audits” tab. Lighthouse is a great resource for developers interested in web performance and quality.

Lighthouse, a quick overview

Auditing a web page with Lighthouse is very simple if you are familiar with the Chrome DevTools. Browse to the page with Chrome, open DevTools (Ctrl+Shift+i or ⌥+⌘+i depending on your system) and then go to the “Audits” section.

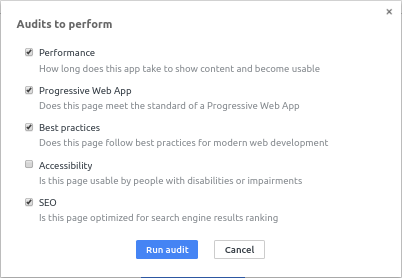

Clicking “Perform an audit” will then allow you to configure the level of the audit according to your interests (Performance, SEO, Accessibility, etc.).

You will be able to see the page loading and reloading, and after a while, a new window will display your audit report.

If your version of Chrome is older than 69 (the current version at the time of writing this article is 67), this procedure will run Lighthouse 2. To test with Lighthouse 3, you can use the Lighthouse extension available on the Chrome Web Store. We used the extension for this test, so what follows refers to Lighthouse 3.

NB: During our test using Lighthouse 3.0.0-beta.0, the screenshots did not fit the expected viewport, which probably affected the Speed Index computation.

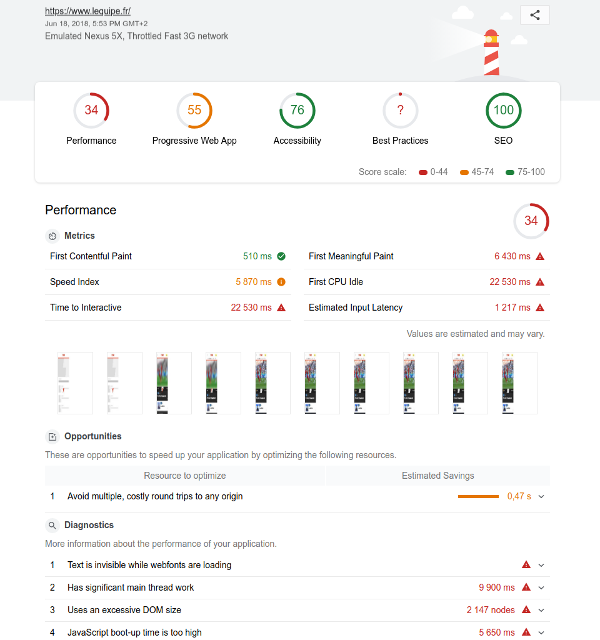

When Lighthouse has completed the evaluation of your page, you’re provided with an audit report that begins with several scores (one score per category chosen during the audit configuration).

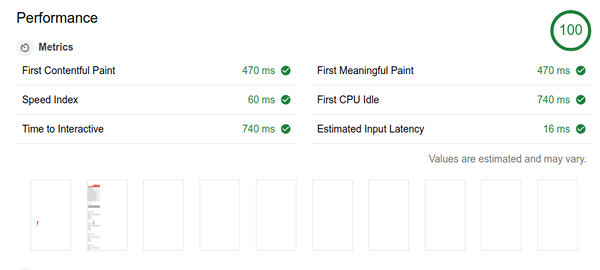

The Performance Score is computed from your speed test results, comparing your website speed against others. Getting a 100 score means that the tested webpage is faster than 98% or more of web pages. A score of 50 means the page is faster than 75% of the web. [Source]

Other scores depend on the compliance of the page with the related best practices. You may note a “Best Practices” category: the name is somewhat misleading, as the other categories also offer best practices. “Miscellaneous” might have been a better fit.

In our example, a question mark is displayed instead of a score. You can get this behavior when some related tests have not been conducted properly and are marked as “Error!”.

After the scores overview, you’ll find the performance results for 6 metrics, and tooltips are available for a quick explanation:

- First Contentful Paint: First Contentful Paint marks the time at which the first text/image is painted.

- First Meaningful Paint: First Meaningful Paint measures when the primary content of a page is visible.

- Speed Index: Speed Index shows how quickly the contents of a page are visibly populated.

- First CPU Idle: First CPU Idle marks the first time at which the page’s main thread is quiet enough to handle input.

- Time to Interactive: Interactive marks the time at which the page is fully interactive.

- Estimated Input Latency: the score above is an estimate of how long your app takes to respond to user input, in milliseconds, during the busiest 5s window of page load. If your latency is higher than 50 ms, users may perceive your app as laggy.

Please note that some of those metrics are still at a very early stage. For example, as stated in its initial specification: First Meaningful Paint [..] matches user-perceived first meaningful paint in 77% of 198 pages. The collected metrics have changed significantly between Lighthouse v2 and v3. We will detail this in our next article. Still, if you’re eager to know, you can check the update announcement.

In the report, you’ll next find a filmstrip: step by step images of the page loading. That’s particularly useful to make sure the page has loaded as expected. For example, during our benchmark, we got a report with discrepancies. We have been able to confirm something went wrong thanks to the filmstrip:

Unfortunately, we were not able to find out more about what went wrong. One may regret the lack of detail when using Lighthouse for complex work. Without access to the page load waterfall, you cannot dig any deeper into what happened here.

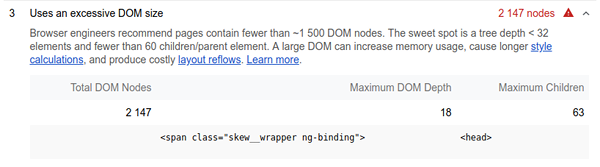

After the performance overview, you’ll be provided with the best practices for each category. Most of the tips are very technical and not extensively detailed in the report itself, but you’ll find very valuable resources under the “Learn more” links.

What also makes Lighthouse a great audit tool is the number of automated checks: about a hundred. Lighthouse also highlights some “Additional items to manually check” that are valuable reminders (for instance, in the accessibility category, “The page has a logical tab order”).

Note that some best practices are duplicated across several categories. For example, the check related to mixed content is present in the “Progressive Web App” category as well as in “Best Practices”.

With Great Power Comes Great Responsibility

Lighthouse is definitely a great resource, both for the performance metrics and for the quality checks it provides, and it is always at hand since it’s available directly in Chrome.

This last advantage may also be your worst enemy!

When conducting the performance test from your desktop computer, you’re relying on a lot of parameters from your local environment and it can be difficult to get stable enough results:

- Internet connection: are you sure you don’t have any background application consuming bandwidth? If you’re sharing the connection with others, are you sure nobody is interfering with your tests? Is your Internet Service Provider offering a connection that is stable enough?

- CPU: are you sure that other activity on your computer is not affecting your running test?

- Chrome extensions: as pointed out by Aymen Loukil they can deeply affect your results. Be particularly careful about extensions related to ad blocking!

- User state: are you sure that your Lighthouse test is starting with a clean state? What about your cookies, the state of your Local Storage, the open sockets (you can check on chrome://net-internals/#sockets), etc.?

- Lighthouse version: Lighthouse may have been updated since your last report, have you checked the changelog? Beware that the extension is auto-updating by default, and the version available through DevTools will be updated when updating Chrome.

The first two points are addressed by the latest Lighthouse version (3.0) and its new mode, “Simulate throttling for performance audits (faster)”. Rather than relying on Chrome DevTools for traffic shaping, Lighthouse now uses a new internal auditing engine: Lantern. It aims to reduce the variability of performance metrics without losing too much accuracy. The approach is very interesting. For further details, a public overview as well as a detailed analysis are available. Time will tell how reliable it can be at scale!

Whatever the Lighthouse version you’re using, keep in mind the impact of your local environment and conditions.

Dareboost, as a synthetic monitoring solution, is particularly aware of these stakes. For every single test on Dareboost, we create a new clean Chrome user profile and open a new Chrome instance. Each of our test regions uses the exact same infrastructure and network conditions. We do not parallelize tests on our virtual machines, to avoid any interdependencies or bottlenecks.
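Part of this clean-state approach can be approximated on a local machine. The sketch below is only an illustration and not Dareboost's implementation: it creates a throwaway Chrome profile directory and builds the matching launch flags. The helper names `fresh_chrome_flags` and `cleanup_profile` are hypothetical; `--user-data-dir`, `--disable-extensions` and `--no-first-run` are standard Chrome command-line switches.

```python
import shutil
import tempfile
from typing import List, Tuple


def fresh_chrome_flags() -> Tuple[List[str], str]:
    """Build Chrome flags that point at a brand-new, empty profile directory.

    Hypothetical helper: returns the flags and the directory path so the
    caller can delete the profile after the audit.
    """
    profile_dir = tempfile.mkdtemp(prefix="lh-profile-")
    flags = [
        f"--user-data-dir={profile_dir}",  # empty profile: no cookies, no Local Storage
        "--disable-extensions",            # keep ad blockers and other extensions out
        "--no-first-run",                  # skip first-run dialogs
    ]
    return flags, profile_dir


def cleanup_profile(profile_dir: str) -> None:
    """Delete the throwaway profile once the audit is finished."""
    shutil.rmtree(profile_dir, ignore_errors=True)


if __name__ == "__main__":
    flags, profile_dir = fresh_chrome_flags()
    print(" ".join(flags))
    cleanup_profile(profile_dir)
```

Such flags only address the user state and the extensions. Network and CPU conditions still depend on the machine running the test.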

If you’re not convinced yet that a dedicated environment is required to run your performance tests, we have run a small experiment.

We have conducted 3 Lighthouse audits on Apache.org from our office (fiber connection – average ping value to Apache.org is 40ms). Here are the median values of Lighthouse results:

| Performance Score | First Contentful Paint (ms) | Speed Index (ms) | Time to Interactive (ms) |
|---|---|---|---|
| 85 | 1690 | 1730 | 5380 |

Same exercise, but throttling our connection to add 500ms of latency (using the tc Unix command):

| Performance Score | First Contentful Paint (ms) | Speed Index (ms) | Time to Interactive (ms) |
|---|---|---|---|
| 67 | 2780 | 3880 | 7320 |

We’re getting a 21% variation in the Performance Score, while the Speed Index has more than doubled in the second context. And we’re getting those results using the Lantern mode (emulated Lighthouse throttling), which actually aims to mitigate local network variability.
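The arithmetic behind this comparison can be checked with a few lines of Python. This is a minimal sketch using the median values from the two tables above; the dictionary keys are our own naming, and we assume the timings are expressed in milliseconds.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Median values reported in the two tables above (timings assumed to be in ms).
baseline = {
    "performance_score": 85,
    "first_contentful_paint_ms": 1690,
    "speed_index_ms": 1730,
    "time_to_interactive_ms": 5380,
}
with_latency = {
    "performance_score": 67,
    "first_contentful_paint_ms": 2780,
    "speed_index_ms": 3880,
    "time_to_interactive_ms": 7320,
}

if __name__ == "__main__":
    for name, before in baseline.items():
        after = with_latency[name]
        change = percent_change(before, after)
        ratio = after / before
        print(f"{name}: {change:+.1f}% (x{ratio:.2f})")
```

The score drops by roughly 21% (from 85 to 67), and the Speed Index is multiplied by about 2.24 (from 1730 to 3880).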

Your own network latency is hopefully not varying this much. But keep in mind that the latency is only one of a lot of variable parameters in your local environment!
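For readers who want to reproduce this kind of latency injection, here is a hedged sketch. The exact tc invocation we used is not given above, so the netem queueing discipline and the interface name `eth0` are assumptions for illustration. The script only builds and prints the commands; running them requires root privileges on Linux.

```python
import shlex
from typing import List


def netem_add_delay(interface: str, delay_ms: int) -> List[str]:
    """tc command that adds a fixed delay to packets leaving `interface`.

    Assumption: the netem qdisc is used; the article only says "tc".
    """
    return ["tc", "qdisc", "add", "dev", interface, "root",
            "netem", "delay", f"{delay_ms}ms"]


def netem_clear(interface: str) -> List[str]:
    """tc command that removes the root qdisc, restoring normal latency."""
    return ["tc", "qdisc", "del", "dev", interface, "root"]


if __name__ == "__main__":
    # "eth0" is a placeholder: check your own interface name first.
    print(shlex.join(netem_add_delay("eth0", 500)))
    print(shlex.join(netem_clear("eth0")))
```

Remember to remove the rule once the test is over, otherwise every application on the machine keeps the extra latency.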

If you have long-term performance testing needs, feel free to discover our Pro Plans. For temporary needs, Dareboost also offers a free version to benefit from a stable and reliable environment, with up to 5 performance tests per month. Give it a try!

When using Lighthouse, if you wish to compare several reports, keep in mind the volatility of your context and related bias!

Some extra Pro tips

- Lighthouse is an open source project available in a GitHub repository. You can dig into the code to learn more, and the bug tracker will come in handy.

- The tool is also executable with a command-line interface.

- You can explore Lighthouse results at the scale of the web by using HTTP Archive collecting data on thousands of pages.

- If you’re using Lighthouse v2 (throttled connection rather than the Lantern emulation), keep in mind that you’re relying on the traffic shaping ability of Chrome DevTools. Latency is injected at the HTTP level, unlike Dareboost or WebPageTest, which inject it at the TCP level. As a result, Chrome DevTools adds no latency during the TCP connection or the SSL handshake.
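As an illustration of the command-line tip above, the sketch below builds a Lighthouse CLI command and computes the median Performance Score over several JSON reports, as we did for our experiment. The helper names are hypothetical. We assume the `lighthouse` CLI is installed (for instance through npm) and that the reports follow the Lighthouse 3 JSON layout, where `categories.performance.score` is a number between 0 and 1.

```python
import json
import statistics
from typing import Iterable, List, Sequence


def lighthouse_command(url: str, output_path: str,
                       chrome_flags: Sequence[str] = ()) -> List[str]:
    """Build the argument list for one Lighthouse CLI run writing a JSON report."""
    cmd = [
        "lighthouse", url,
        "--output=json",
        f"--output-path={output_path}",
        "--throttling-method=simulate",  # simulated (Lantern) throttling
        "--quiet",
    ]
    if chrome_flags:
        cmd.append("--chrome-flags=" + " ".join(chrome_flags))
    return cmd


def median_performance_score(report_paths: Iterable[str]) -> float:
    """Median Performance Score (0 to 100) across several JSON reports.

    Assumption: Lighthouse 3 report layout, with a 0 to 1 score.
    """
    scores = []
    for path in report_paths:
        with open(path, encoding="utf-8") as handle:
            report = json.load(handle)
        scores.append(report["categories"]["performance"]["score"] * 100)
    return statistics.median(scores)


if __name__ == "__main__":
    # Print the commands for three runs; pass each one to subprocess.run()
    # to actually execute it.
    for run in range(1, 4):
        print(" ".join(lighthouse_command("https://www.apache.org/",
                                          f"./report-{run}.json")))
```

Using the median rather than a single run limits the weight of one unlucky measurement, but it does not remove the bias of the local environment.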

To conclude, Lighthouse is a useful and promising tool. Like the two other Google tools previously tested, it could give clues about Google’s strategy with the Speed Update. Let’s discuss that in our next article. Subscribe to our newsletter so you don’t miss it.

We’ll see you soon for a new episode of our series on Google tools to prepare the Speed Update!

When I run Lighthouse on my page, I only get a report for the first page. When I perform any action afterwards, I don't get a report. How can we get reports for subsequent pages?

With Dareboost, you can use our User Journey Monitoring.